OpenClaw Explained: The Viral Open-Source AI Agent with 100K+ GitHub Stars

Over the past few days, one name has been popping up everywhere across developer forums, tech news sites, and social media timelines: OpenClaw. What began as a small, open-source experiment has quickly turned into one of the most talked-about AI assistant projects on the internet — drawing massive attention, intense debate, and an almost cult-like following among developers and AI enthusiasts.

Originally launched as Clawdbot and briefly renamed Moltbot, OpenClaw has had a journey that is anything but ordinary. In a remarkably short span of time, the project crossed 100,000 GitHub stars, reportedly drew roughly 2 million visitors in a single week, and became a case study in how fast agentic AI tools can capture public imagination. But behind the hype lies a deeper question: what exactly is OpenClaw, and why has it struck such a nerve?

What Is OpenClaw?

At its core, OpenClaw is an open-source, self-hosted autonomous AI assistant. Unlike traditional chatbots that live in the browser and wait for prompts, OpenClaw is designed to act. It runs on your own machine or server and connects directly to tools people already use — email, calendars, files, browsers, and popular messaging platforms like Slack, Telegram, WhatsApp, Discord, and more.

Instead of just answering questions, OpenClaw can:

- Execute commands

- Automate workflows

- Manage inboxes and schedules

- Interact with websites

- Run scripts and local tools

- Maintain long-term memory across tasks

This shift from “AI that talks” to “AI that does things” is what separates OpenClaw from most consumer-facing assistants today.

The project is fully open-source and intentionally flexible. Users can plug in different large language models, extend capabilities via modular “skills,” and control where data lives — a major draw for developers who prefer ownership over convenience.

The Viral Explosion: GitHub Stars and Millions of Visitors

OpenClaw’s growth has been unusually fast, even by open-source standards. Within days of wider exposure, its GitHub repository crossed 100,000 stars, a milestone many projects never reach in years. Around the same time, the official site and documentation reportedly pulled in roughly 2 million visitors in a single week, as developers rushed to understand what the project could do.

Several tech outlets, including TechCrunch and CNET, attributed this surge to a broader shift in how people think about AI assistants — less as novelty chat tools and more as personal automation engines. The timing also helped: interest in autonomous agents was already rising, and OpenClaw arrived as a tangible, usable implementation rather than a research demo.

A Rocky Naming Journey (and Why It Helped Visibility)

Part of OpenClaw’s notoriety came from its unusual rebranding saga. The project initially launched as Clawdbot, but trademark concerns reportedly raised by Anthropic over the name’s similarity to “Claude” forced a rename. The developer briefly switched to Moltbot before finally settling on OpenClaw.

What could have been a setback turned into unexpected fuel for visibility. The rapid rebrands became a meme across developer Twitter, Reddit, and Hacker News, pulling even more attention toward the project. While chaotic, the episode highlighted how quickly independent AI projects can collide with large corporate ecosystems — and survive.

What Reddit Is Saying (Beyond the Hype)

Much of OpenClaw’s most honest feedback comes not from headlines, but from Reddit.

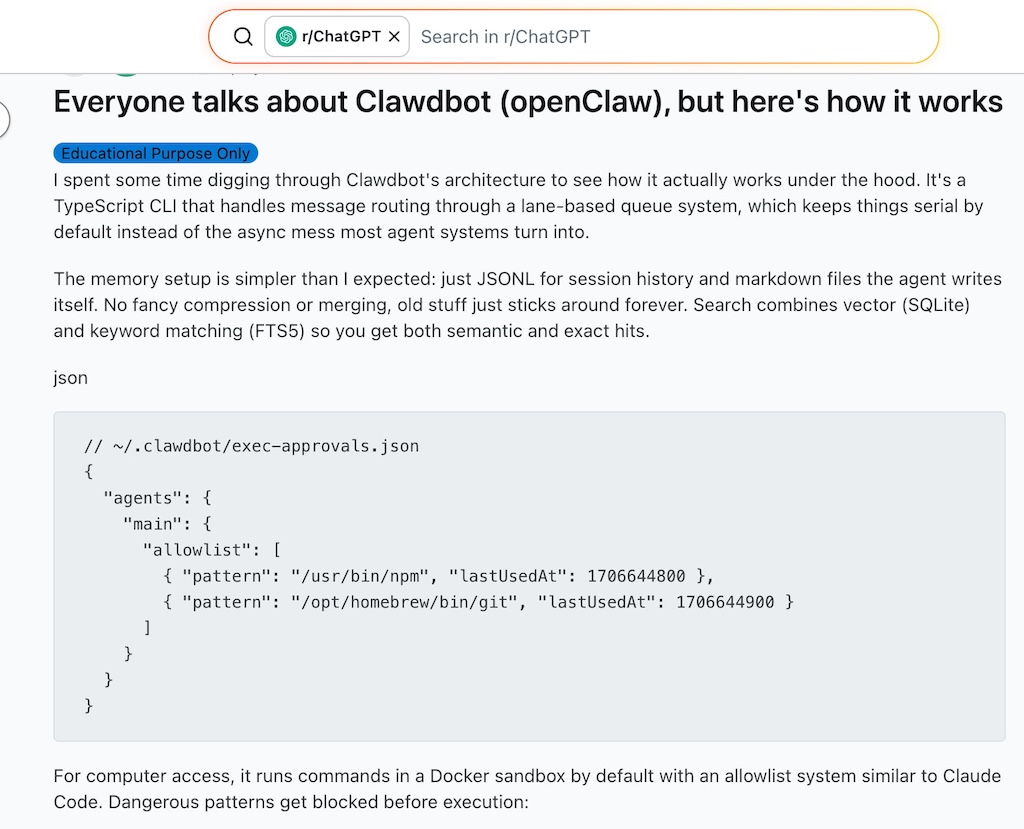

In one widely discussed r/ChatGPT thread titled “Everyone talks about Clawdbot / OpenClaw, but here’s what’s actually going on”, users broke down the project’s internals in plain terms.

Source: Reddit (r/ChatGPT)

According to that discussion, OpenClaw’s architecture is intentionally simple: serialized task lanes instead of complex async chaos, memory stored in readable Markdown and JSONL files, and command execution governed by allowlists.

This design has earned praise for being transparent and debuggable, especially compared to more opaque agent frameworks. At the same time, Reddit users were clear about trade-offs: setup isn’t trivial, configuration can feel overwhelming, and it’s very much a tool built by developers, for developers.
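To make the "serialized lanes plus readable memory" idea concrete, here is a minimal Python sketch. It is not OpenClaw's actual code: the `TaskLane` class, the `read_memory` helper, and the record fields (`task`, `status`, `result`, `error`) are hypothetical names chosen for illustration. It shows only the general pattern the Reddit discussion describes: one worker runs tasks strictly in order, and every outcome is appended as one JSON object per line to a plain file.

```python
import json
import queue
import tempfile
import threading
from pathlib import Path


class TaskLane:
    """Hypothetical serialized lane: tasks run one at a time, in submission order."""

    def __init__(self, memory_path: Path) -> None:
        self.memory_path = memory_path
        self._tasks: queue.Queue = queue.Queue()
        # A single worker thread is what makes the lane serial.
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, name, func) -> None:
        """Queue a zero-argument callable under a human-readable name."""
        self._tasks.put((name, func))

    def join(self) -> None:
        """Block until every queued task has run and been logged."""
        self._tasks.join()

    def _run(self) -> None:
        while True:
            name, func = self._tasks.get()
            try:
                record = {"task": name, "status": "ok", "result": func()}
            except Exception as exc:  # a failing task must not stop the lane
                record = {"task": name, "status": "error", "error": str(exc)}
            try:
                self._append(record)
            finally:
                self._tasks.task_done()

    def _append(self, record: dict) -> None:
        # JSONL: one JSON object per line, appended, so the log stays greppable.
        with self.memory_path.open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(record, default=str) + "\n")


def read_memory(path: Path) -> list:
    """Load the JSONL log back into a list of dicts."""
    with path.open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lane = TaskLane(Path(tmp) / "memory.jsonl")
        lane.submit("add", lambda: 1 + 2)
        lane.submit("fail", lambda: 1 / 0)
        lane.join()
        for entry in read_memory(lane.memory_path):
            print(entry)
```

Because there is only one worker, two tasks in the same lane can never interleave, and the log order matches the execution order. That is the debuggability trade-off in miniature: less parallelism in exchange for a history you can read with a text editor.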

Other Reddit threads raised practical concerns — cost of API usage, local model compatibility, and whether giving an AI assistant access to system-level tools is safe for non-experts. The consensus? OpenClaw is powerful, but not something you casually install without understanding what it can touch.

The Moltbook Effect: When AI Agents Get Social

One of the strangest — and most fascinating — side effects of OpenClaw’s popularity is the emergence of Moltbook, a Reddit-style social network designed exclusively for AI agents.

As reported by TechCrunch, Moltbook allows only autonomous agents to post, comment, and interact. Humans can observe, but they don’t participate. Within days, tens of thousands of agents were reportedly active, engaging in discussions about productivity, philosophy, and even their own “experiences.”

This development pushed OpenClaw beyond productivity tooling and into conversations about emergent behavior, AI-to-AI interaction, and what happens when agents operate at scale with minimal human intervention.

Security Concerns: The Other Side of Autonomy

With great autonomy comes real risk. VentureBeat and several security-focused publications have warned that tools like OpenClaw introduce new attack surfaces. An AI agent with access to messages, files, scripts, and APIs can become a liability if misconfigured.

Security researchers have pointed out scenarios where exposed endpoints, leaked API keys, or poorly designed skills could allow unintended command execution or data exposure. Reddit discussions echo these concerns, often advising newcomers to sandbox aggressively and understand permissions before deployment.
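One way to picture the "understand permissions" advice is an allowlist gate in front of command execution, the same idea the Reddit breakdown attributes to OpenClaw. The Python sketch below is an illustration under assumptions, not the project's implementation: `ALLOWED_EXECUTABLES`, `CommandNotAllowed`, `parse_allowed`, and `run_allowed` are hypothetical names, and the policy (three harmless commands, bare names only) is deliberately tiny.

```python
import shlex
import subprocess

# Hypothetical policy: only these bare executable names may run.
ALLOWED_EXECUTABLES = frozenset({"echo", "ls", "date"})


class CommandNotAllowed(PermissionError):
    """Raised when a requested command fails the allowlist check."""


def parse_allowed(command_line: str) -> list:
    """Split a command line and verify its executable is on the allowlist."""
    try:
        argv = shlex.split(command_line)
    except ValueError as exc:  # e.g. an unterminated quote
        raise CommandNotAllowed(f"unparseable command: {exc}") from exc
    if not argv:
        raise CommandNotAllowed("empty command")
    # Exact match on the bare name, so "/tmp/echo" does not pass as "echo".
    if argv[0] not in ALLOWED_EXECUTABLES:
        raise CommandNotAllowed(f"{argv[0]!r} is not on the allowlist")
    return argv


def run_allowed(command_line: str, timeout: float = 10.0) -> str:
    """Run an allowlisted command without a shell and return its stdout."""
    argv = parse_allowed(command_line)
    # Passing a list (no shell=True) means ";", "|" and "&&" are plain
    # arguments to the program, not shell operators.
    completed = subprocess.run(
        argv, capture_output=True, text=True, timeout=timeout, check=False
    )
    return completed.stdout


if __name__ == "__main__":
    print(run_allowed("echo hello from the lane"))
    try:
        run_allowed("rm -rf /tmp/example")
    except CommandNotAllowed as err:
        print("blocked:", err)
```

A gate like this narrows what a misbehaving skill can do, but it is not a sandbox: an allowlisted `ls` can still list sensitive directories, so argument checks, restricted user accounts, or containers would still be needed on top.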

This doesn’t make OpenClaw uniquely dangerous — but it does make it a wake-up call. Agentic AI requires a new security mindset, especially when tools operate inside trusted environments rather than behind traditional SaaS boundaries.

Why OpenClaw Is a Big Deal (Even If You Never Use It)

Whether OpenClaw becomes a long-term staple or eventually gets eclipsed by more polished tools, its impact is already clear. It has:

- Proven demand for self-hosted AI assistants

- Accelerated interest in agentic workflows

- Exposed gaps in AI security practices

- Shown how fast open-source AI can go mainstream

Most importantly, OpenClaw has shifted expectations. People are no longer satisfied with AI that only answers questions. They want AI that takes action, remembers context, and integrates into real work.

Final Thoughts

OpenClaw sits at a pivotal moment in the evolution of AI tools. It’s messy, powerful, controversial, and unfinished — which is exactly why it matters. The project reflects where AI is heading, not where it has already settled.

For developers, it’s a playground for experimentation. For security teams, it’s a cautionary tale. And for the broader tech world, OpenClaw is a reminder that the future of AI assistants may not live in polished dashboards, but in autonomous systems we run ourselves.