How AI Is Changing Content Trust: Tools Startups Use to Verify Text and Images

By 2026, the internet will hold a wealth of content. Every day, thousands upon thousands of articles, videos, and images are published, shared, and reshared. That is great for communication, but it creates a new problem: how do we verify that what is being shared is true?

AI makes it easier to create convincing text and images, which is why content trust is now one of the most important business challenges startups face.

Trust is not just a brand value. It's an essential survival strategy.

Startups that publish content quickly without checking it first can accidentally spread false information. This can damage reputations, reduce customer loyalty, or even create legal risk. That is why startups now use verification tools to separate what's real from what's not, instead of simply publishing as fast as possible.

Why Content Trust Is a Startup Issue

Startups often rely on:

- Fast growth

- High content output

- Community-driven marketing

- User-generated content

- Influencer partnerships

All of these are vulnerable to misinformation.

The fallout from a startup sharing a fake photo, a misleading stat, or AI-generated material that appears authentic can be rapid. Even if it was unintentional, the public can react harshly.

Trust is no longer a “nice to have.” It’s a key asset.

The Problem: AI Makes Fake Content Look Real

AI can now generate:

- Human-like writing

- Realistic images

- Voice clones

- Deepfake videos

- Fake documents

This creates a new reality: false content can be created at scale.

AI content is also capable of being emotionally persuasive. It is designed to appear polished, credible and convincing.

That means people don’t always question it. And when they don’t, misinformation spreads.

The Tools Startups Use to Verify Text

Text verification has evolved quickly. Startups are using a combination of tools to protect their content integrity.

The goal is to ensure content is:

- accurate

- original

- reliable

- trustworthy

1. Plagiarism and Originality Checkers

These tools compare text against massive databases to find:

- copied content

- slightly modified paragraphs

- duplicate sections

- uncredited sources

For startups, plagiarism isn’t just an ethical issue—it’s a business risk.

It can damage reputation and create legal issues. Startups often use these tools for:

- blog posts

- product descriptions

- press releases

- research reports
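
To make the comparison step concrete, here is a minimal Python sketch of one common way to measure overlap between a draft and known sources: word n-gram "shingles" scored with Jaccard similarity. It is an illustration, not a description of how any particular checker works. The function names, the in-memory `corpus` dictionary, and the 0.5 threshold are all assumptions made for the example; real tools search far larger indexes and use more robust matching.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word n-grams ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def find_matches(
    draft: str, corpus: dict[str, str], threshold: float = 0.5
) -> list[tuple[str, float]]:
    """Return (source_id, score) pairs at or above the threshold, highest first."""
    scored = [(sid, jaccard_similarity(draft, text)) for sid, text in corpus.items()]
    hits = [(sid, score) for sid, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)
```

Because shingles overlap word by word, a paragraph with only a few words swapped still shares most of its shingles with the original, which is how slightly modified copies can surface.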

2. AI Writing Detection Tools

AI writing detection tools analyze patterns in text to determine whether it was generated by AI.

They look for:

- repetitive sentence structures

- unusual word patterns

- “too perfect” grammar

- lack of real-world context

These tools aren't perfect, but they can help teams identify content that needs a closer look.

Common use cases include:

- user-generated content moderation

- content moderation for platforms

- internal quality control

- preventing fake reviews
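
As a rough illustration of the kind of signals listed above, the Python sketch below measures how repetitive a text's sentence structure and vocabulary are. This is a toy heuristic, not a real detector: actual tools rely on trained models, and the function names and thresholds here (`min_sentences`, 0.2, 0.5) are assumptions chosen only for the example. The output is a prompt for human review, never a verdict.

```python
import re
from collections import Counter
from statistics import mean, pstdev


def style_signals(text: str) -> dict[str, float]:
    """Compute rough stylistic signals; these are hints, not proof."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    sentences = [s.strip() for s in parts if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    openers = Counter(s.split()[0].lower() for s in sentences)
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "sentence_count": float(len(sentences)),
        # Low variation in sentence length suggests repetitive structure.
        "length_variation": pstdev(lengths) / mean(lengths) if lengths else 0.0,
        # Share of sentences that start with the most common opening word.
        "top_opener_share": max(openers.values()) / len(sentences) if sentences else 0.0,
        # Distinct words divided by total words (lower means more repetition).
        "vocabulary_ratio": len(set(words)) / len(words) if words else 0.0,
    }


def needs_closer_look(text: str, min_sentences: int = 5) -> bool:
    """Flag text for human review when its structure looks highly repetitive."""
    signals = style_signals(text)
    if signals["sentence_count"] < min_sentences:
        return False  # Too short to say anything useful.
    return signals["length_variation"] < 0.2 and signals["top_opener_share"] > 0.5
```

Short texts are deliberately never flagged, since a handful of sentences gives these statistics too little to work with.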

3. Fact-Checking and Source Verification

This is where startups ensure that content is true—not just well-written.

Tools in this category help teams:

- verify quotes

- confirm dates and facts

- cross-check sources

- detect outdated or misleading information

Even well-written content can be wrong. And wrong content can be more harmful than no content at all.

The Tools Startups Use to Verify Images

Images carry emotional weight. A fake image can create outrage, manipulate opinions, or cause a brand crisis.

Here are the tools startups use to verify images.

1. Reverse Image Search

Reverse image search helps determine:

- whether the image exists online already

- whether it was taken from another source

- whether it has been altered

This is a basic but powerful tool for verifying image origin.
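
One building block often used for matching near-identical images is a perceptual hash. The Python sketch below shows a simple "average hash" compared by Hamming distance. It is not a claim about how any specific reverse image search service works. It assumes the image has already been shrunk to a small grayscale grid (for example 8x8) by an image library, and the `max_distance` cutoff is an arbitrary value for illustration.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Hash a small grayscale grid: one bit per pixel, set when brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_image(
    a: list[list[int]], b: list[list[int]], max_distance: int = 2
) -> bool:
    """Treat two equally sized grids as the same picture if their hashes nearly match."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance
```

Because each bit only records whether a pixel is brighter than the image's own average, a uniformly brightened or recompressed copy tends to produce the same hash, while a different picture does not.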

2. Metadata Analysis

Most images include metadata (EXIF data), which can reveal:

- camera model

- date/time

- GPS location

- editing software used

Metadata can help determine whether an image has been altered.

However, metadata can be stripped or edited, so this method is not reliable on its own.
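
Here is a small Python sketch of what a metadata check might look like once the EXIF fields have been read into a dictionary by an image library. The tag names (`Model`, `Software`, `DateTimeOriginal`) and the timestamp format follow the EXIF standard, but the function name, the `EDITOR_HINTS` list, and the rules themselves are assumptions made for illustration. A flag is a reason to look closer, not evidence of manipulation.

```python
from datetime import datetime

# Assumption: software names that suggest the file passed through an editor.
EDITOR_HINTS = ("photoshop", "gimp", "lightroom")


def metadata_flags(exif: dict[str, str], received_at: datetime) -> list[str]:
    """Return human-readable reasons an image's metadata deserves a second look."""
    if not exif:
        return ["no metadata present (stripped, or never written)"]
    flags = []
    if "Model" not in exif:
        flags.append("camera model missing")
    software = exif.get("Software", "").lower()
    if any(hint in software for hint in EDITOR_HINTS):
        flags.append(f"editing software recorded: {exif['Software']}")
    taken = exif.get("DateTimeOriginal")
    if taken:
        try:
            # EXIF stores timestamps as "YYYY:MM:DD HH:MM:SS".
            taken_at = datetime.strptime(taken, "%Y:%m:%d %H:%M:%S")
            if taken_at > received_at:
                flags.append("capture time is later than the time the file was received")
        except ValueError:
            flags.append("capture time is not in the expected format")
    return flags
```

An empty result only means nothing looked odd in the fields that were present, which is why this check works best alongside the other methods in this section.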

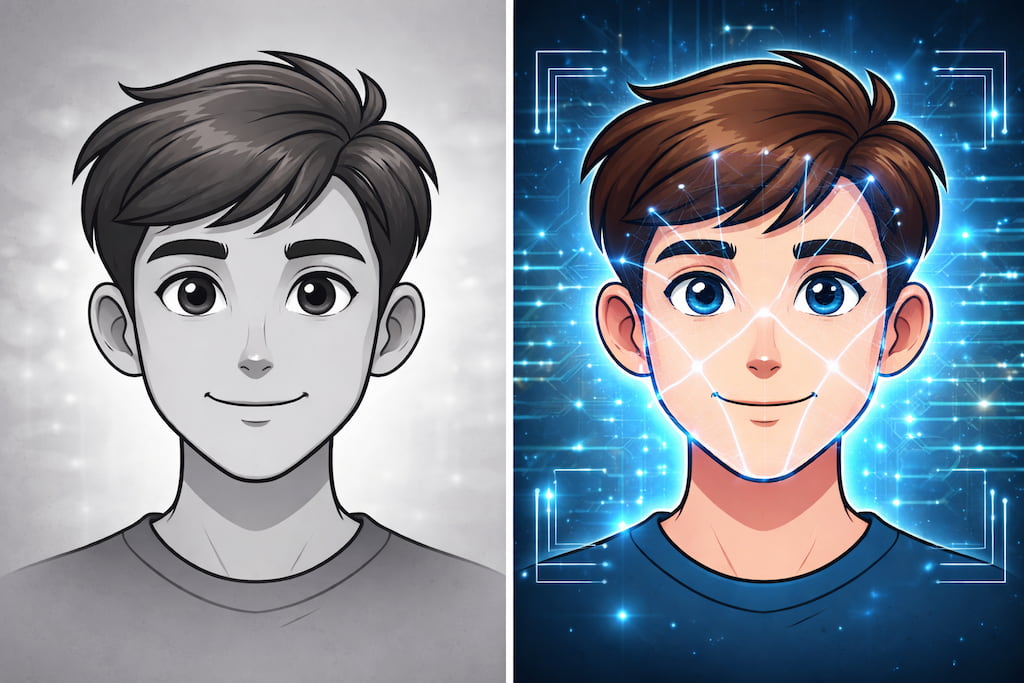

3. AI Image Detectors (The Real Game Changer)

AI image detectors are quickly becoming essential for startups.

They don’t “guess” by looking at the image. They analyze patterns of technical data that are normally invisible to humans.

What AI Image Detectors Look For

AI-generated images often contain subtle anomalies, such as:

- unusual texture patterns

- inconsistent lighting

- repetitive details

- unnatural shadows

- incorrect reflections

These are artifacts of how AI generates images.

AI image detectors use machine learning models trained on thousands of real and AI-generated images. They compare the image against known patterns and provide a probability score indicating whether the image is AI-generated or manipulated.
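
A probability score is only useful if a team decides in advance what to do with it. The Python sketch below maps a detector's score to one of three actions. The function name, the action labels, and both thresholds are hypothetical; each team would tune them to its own detector and risk tolerance.

```python
def triage(ai_probability: float, review_at: float = 0.5, hold_at: float = 0.9) -> str:
    """Map a detector's probability score (0.0 to 1.0) to an action."""
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if ai_probability >= hold_at:
        return "hold"    # Very likely AI-generated or manipulated: do not publish yet.
    if ai_probability >= review_at:
        return "review"  # Uncertain: send to a human reviewer.
    return "pass"        # Low score: continue through the normal process.
```

Keeping a middle "review" band reflects the fact that a score is an estimate, so borderline images go to a person instead of being accepted or rejected automatically.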

Why AI Image Detectors Matter

Startups use AI image detectors to:

- prevent brand damage from fake visuals

- detect manipulated product photos

- verify influencer content

- protect user-generated content platforms

- reduce misinformation in marketing

In other words, they protect trust.

Building a Verification Workflow That Works

The goal is not to be paranoid. The goal is to create a system that protects your company while still allowing for speed and creativity.

Startups usually follow a layered approach:

A Simple Verification Workflow

Step 1: Automated Screening

Tools automatically check text and images for:

- plagiarism

- AI generation

- metadata inconsistencies

Step 2: Manual Review

Human moderators review flagged content.

Step 3: Source Confirmation

If needed, teams verify sources through:

- trusted databases

- verified references

- direct confirmation

Step 4: Final Approval

Content is approved only after it passes all checks.

This process keeps the workflow fast and scalable while protecting trust.
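
The four steps can be sketched in Python as a small pipeline. This is one possible shape under stated assumptions, not a reference implementation: the `Item` class, the status names, and the `human_approves` and `sources_confirmed` callbacks are all hypothetical stand-ins for whatever tools and review process a team actually uses.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A check inspects content and returns a flag string, or None when nothing is wrong.
Check = Callable[[str], Optional[str]]


@dataclass
class Item:
    content: str
    flags: list[str] = field(default_factory=list)
    status: str = "pending"


def automated_screening(item: Item, checks: list[Check]) -> Item:
    """Step 1: run every automated check and record what each one flags."""
    for check in checks:
        flag = check(item.content)
        if flag:
            item.flags.append(flag)
    return item


def run_workflow(
    item: Item,
    checks: list[Check],
    human_approves: Callable[[Item], bool],
    sources_confirmed: Callable[[Item], bool],
) -> Item:
    """Run the layered workflow and set the item's final status."""
    automated_screening(item, checks)
    # Step 2: only flagged content goes to a human reviewer.
    if item.flags and not human_approves(item):
        item.status = "rejected"
        return item
    # Step 3: confirm sources before anything is published.
    if not sources_confirmed(item):
        item.status = "needs_sources"
        return item
    # Step 4: content is approved only after passing every check.
    item.status = "approved"
    return item
```

Unflagged content skips the manual step entirely, which is what keeps the process fast: people spend time only on the items the automated layer could not clear.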

The New Standard: Trust as a Product Feature

In today’s market, trust is a differentiator. Customers choose brands they believe are honest and transparent.

For startups, that means:

- being accurate

- being consistent

- being accountable

- being responsible

AI tools can help, but trust still comes from how teams use those tools.

The Human Element: Why Verification Needs People, Not Just Tools

Even the most sophisticated AI-based detection tools are not 100% accurate. Cutting-edge models can create fakes convincing enough to bypass detection systems. Startups must combine human judgment with technology to ensure accuracy. Human reviewers bring context, ethics, and common sense that AI cannot duplicate.

This is especially important when dealing with sensitive issues such as politics, health, finance, or social concerns. In these situations, a single mistake can result in a public relations catastrophe or even legal exposure. Many startups, even small ones, have dedicated teams for content integrity. These teams are a last check to ensure that content is not only polished but also ethical.

Transparency: The New Competitive Advantage

Startups can turn verification into an advantage. Being transparent about it builds trust between a company and its users. This can be as simple as publishing a verification policy, clearly labeling AI-generated content, or providing sources for claims and statistics.

Audiences are more likely to engage, recommend, and share when they know that a company is serious about accuracy. Trust becomes a differentiator, something that sets startups apart from competitors who prioritize speed over truth.

The Bottom Line

AI has changed content creation forever. It has also changed content verification.

Startups now use AI-based tools to detect fake images and text and to protect their reputation. Verification is more than a technical problem. It's also a business priority.

Trust is the new currency. And in a world where anyone can generate anything, the ability to verify content quickly and accurately is one of the most valuable skills a startup can build.